
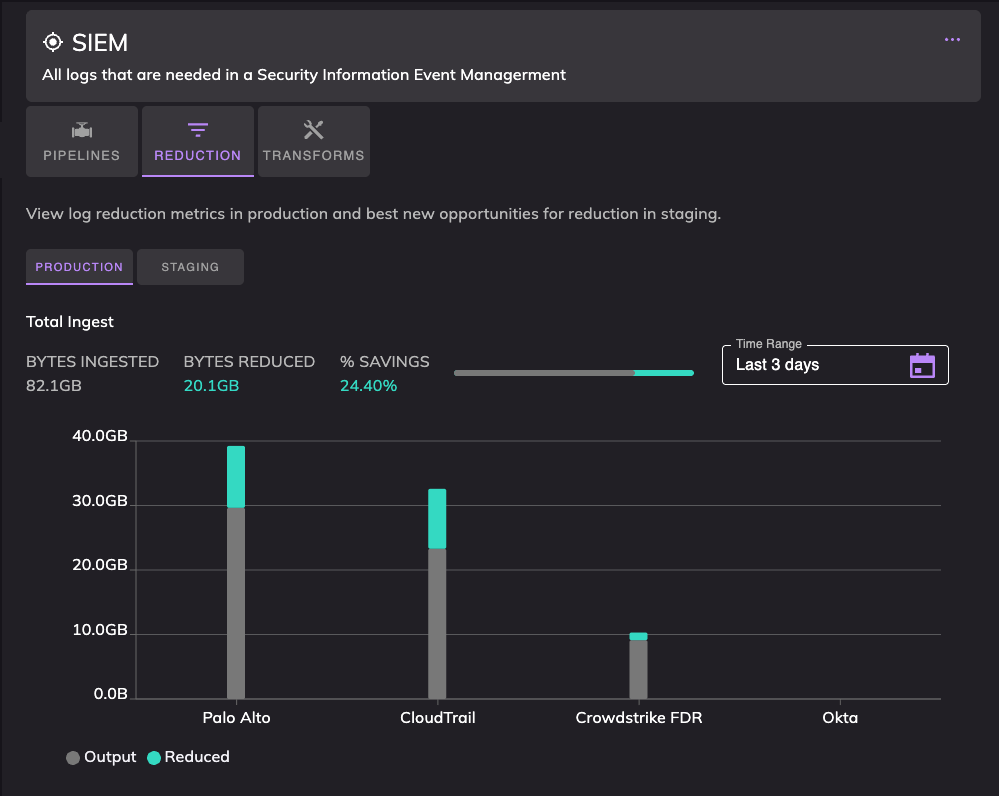

Realm Focus - Log Reduction

Overview

Realm’s reduction engine is a core component of the platform. It is designed to minimize the volume of low-value, noisy data sent to your SIEM and other security tools. By filtering out irrelevant logs while retaining critical security telemetry, it reduces ingestion costs and improves the signal-to-noise ratio for your SOC.

The reduction process ensures the right data reaches the right destination at the right time, keeping both your infrastructure and security operations efficient.

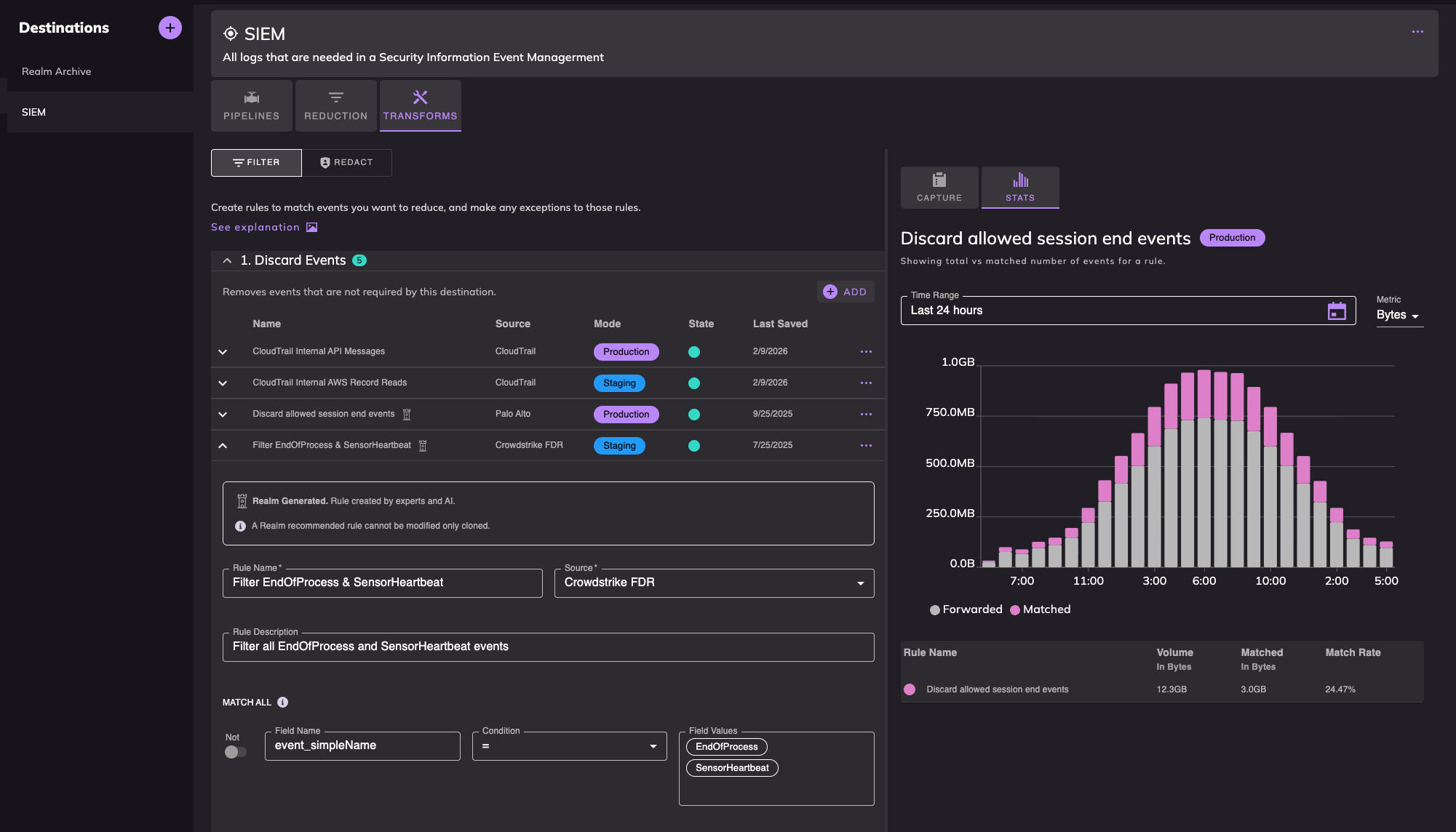

Realm Recommended Rules

To accelerate your time-to-value, Realm provides personalized reduction recommendations generated by the Realm Refinery—our proprietary data science engine.

Unlike generic "Out-of-the-Box" rules, Refinery recommendations are:

- Personalized: Generated based on the specific telemetry patterns and noise profiles found in your unique environment.

- Expert-Vetted: Each recommendation is curated and analyzed by both our AI models and our in-house security research team before being presented.

- Evidence-Based: Every recommendation includes a match rate, showing exactly how much data would be reduced if the rule were active.
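To make the match rate concrete, here is a minimal sketch of how such a figure could be computed over a sample of events. It assumes the rate is measured by event count; Realm may measure it differently (for example, by bytes). The function name, event fields, and sample data are hypothetical and are not part of the Realm product.

```python
from typing import Callable, Iterable

def match_rate(events: Iterable[dict], rule: Callable[[dict], bool]) -> float:
    """Fraction of events the rule matches (0.0 when there are no events)."""
    events = list(events)
    if not events:
        return 0.0
    matched = sum(1 for event in events if rule(event))
    return matched / len(events)

# Hypothetical sample and rule, for illustration only.
sample = [
    {"type": "health_check"},
    {"type": "health_check"},
    {"type": "login_failure"},
    {"type": "health_check"},
]

def is_health_check(event: dict) -> bool:
    return event.get("type") == "health_check"

print(f"{match_rate(sample, is_health_check):.0%}")  # prints 75%
```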

Rule Lifecycle: Staging vs. Production

Realm prioritizes operational stability. To prevent accidental data loss, every rule (whether custom-built or Refinery-recommended) follows a strict lifecycle involving two statuses:

1. Staging Status

When a rule is first created or recommended, it enters Staging mode.

- Passive Execution: The rule runs against your live data flow but does not perform any actual transformations or deletions.

- Statistics Collection: Realm collects telemetry on how the rule would have performed.

- Validation: You can review the exact events that would be discarded or deduplicated before committing to the change.

2. Production Status

To begin actively transforming data and reducing costs, a user must explicitly Deploy the rule into Production.

- Active Transformation: Only Production rules actively modify the data stream.

- Real-Time Impact: Once deployed, the "Deleted Events" metrics will begin reflecting the actual volume removed from your downstream ingestion.
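The difference between the two statuses can be sketched as follows. This is an illustrative model only, not Realm's implementation: the `Rule` class, its counters, and `apply_rule` are hypothetical names. A Staging rule only counts what it would have matched, while a Production rule actually removes matching events.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable

class Status(Enum):
    STAGING = "staging"
    PRODUCTION = "production"

@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]
    status: Status = Status.STAGING  # every new rule starts in Staging
    would_match: int = 0             # statistics collected while in Staging
    removed: int = 0                 # events actually removed in Production

    def deploy(self) -> None:
        """Explicit user action that moves the rule to Production."""
        self.status = Status.PRODUCTION

def apply_rule(rule: Rule, events: list[dict]) -> list[dict]:
    """Return the events that continue downstream after the rule runs."""
    kept = []
    for event in events:
        if rule.matches(event):
            if rule.status is Status.PRODUCTION:
                rule.removed += 1    # active transformation
                continue
            rule.would_match += 1    # passive: count, but keep the event
        kept.append(event)
    return kept

events = [{"type": "heartbeat"}, {"type": "alert"}]
rule = Rule("drop-heartbeats", lambda e: e["type"] == "heartbeat")

staged = apply_rule(rule, events)    # stream unchanged, statistics updated
rule.deploy()
deployed = apply_rule(rule, events)  # heartbeat is now removed
```

In this model, nothing changes in the data stream until `deploy()` is called, which mirrors the explicit Deploy step described above.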

The Reduction Flow

Data flows through a multi-stage filtering process. Every event is evaluated against your specific business and security needs through a sequential "filtering lens" approach.

1. Exceptions

Before any data is discarded or modified, it passes through the Exceptions layer. This is the "Safe Harbor" for your most critical data.

- Logic: Events that match defined exception rules bypass all further reduction steps.

- Purpose: To ensure that mission-critical threat telemetry is never accidentally discarded.

- Flow: Events matching exceptions move directly to the Output Feed.

2. Reduction: Discard

Events that do not match an exception rule proceed to the Discard stage. This is the primary method for high-volume noise reduction.

- Event Discard: You can define rules to drop specific event types that offer no forensic or detection value (e.g., routine internal health checks or benign "allow" heartbeats).

- Top DNS Discard: A specialized transformation that automatically identifies and targets high-volume, repetitive DNS noise. DNS logs are often the largest contributor to "benign bulk," and this feature allows you to prune them without losing unique signals.

- Outcome: Events matching these rules are not sent to high-cost ingestion tools. Instead, they are routed to a lower-cost forensic archive such as the Data Haven.
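As a rough illustration of the Discard stage, the sketch below finds the most frequently queried domains and routes matching DNS events to an archive instead of the main output. How Realm actually identifies DNS noise is not described here, so the frequency-based approach, the function names, and the event fields (`type`, `query`) are all assumptions.

```python
from collections import Counter

def top_dns_domains(events: list[dict], n: int = 1) -> set[str]:
    """Return the n most frequently queried domains among DNS events."""
    counts = Counter(e["query"] for e in events if e.get("type") == "dns")
    return {domain for domain, _ in counts.most_common(n)}

def route_discards(events: list[dict], noisy_domains: set[str]):
    """Split events into (forwarded, archived) lists."""
    forwarded, archived = [], []
    for event in events:
        if event.get("type") == "dns" and event.get("query") in noisy_domains:
            archived.append(event)   # lower-cost archive, not deleted outright
        else:
            forwarded.append(event)  # continues toward the SIEM
    return forwarded, archived

# Hypothetical sample data.
events = [
    {"type": "dns", "query": "updates.example.com"},
    {"type": "dns", "query": "updates.example.com"},
    {"type": "dns", "query": "rare.example.net"},
    {"type": "dns", "query": "updates.example.com"},
    {"type": "auth", "user": "alice"},
]

noisy = top_dns_domains(events, n=1)
forwarded, archived = route_discards(events, noisy)
```

Here the single rare lookup and the non-DNS event are forwarded, while the three repetitive lookups go to the archive.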

3. Reduction: Deduplication

The final stage removes redundant information from the events that remain.

- Logic: The engine identifies duplicate events that occur within a specific time window and share identical key attributes.

- Process: Within each group of duplicates, the redundant copies are suppressed and a single representative event is passed forward.

- Outcome: Duplicate events are consolidated, drastically lowering your storage footprint without losing the core forensic signal.
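The deduplication logic above can be sketched in a few lines. This is a simplified model under stated assumptions: events carry a numeric `ts` timestamp in seconds and arrive in time order, the first event in a window is the one passed forward, and the window is anchored at that first event. The function and field names are hypothetical.

```python
def deduplicate(events, key_fields, window_seconds):
    """Keep the first event per key in each window; suppress later repeats.

    Assumes events are sorted by their 'ts' field (seconds).
    """
    last_kept = {}  # key -> timestamp of the event that was passed forward
    kept, suppressed = [], 0
    for event in events:
        key = tuple(event.get(f) for f in key_fields)
        anchor = last_kept.get(key)
        if anchor is not None and event["ts"] - anchor < window_seconds:
            suppressed += 1          # duplicate inside the window
            continue
        last_kept[key] = event["ts"]  # start a new window for this key
        kept.append(event)
    return kept, suppressed

# Hypothetical sample: the same error repeating on one host.
events = [
    {"ts": 0,  "host": "web01", "msg": "disk full"},
    {"ts": 5,  "host": "db01",  "msg": "disk full"},
    {"ts": 10, "host": "web01", "msg": "disk full"},
    {"ts": 70, "host": "web01", "msg": "disk full"},
]

kept, suppressed = deduplicate(events, key_fields=("host", "msg"), window_seconds=60)
```

With a 60-second window, the repeat at `ts=10` is suppressed, while the event at `ts=70` falls outside the window and is passed forward again.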

Reduction Logic Summary

| Feature | Action | Use Case |
|---|---|---|
| Exceptions | Bypass | Protect critical logs (e.g., Domain Controllers, Executive endpoints) from being filtered. |
| Discard | Delete/Route | Eliminate "noisy" logs like routine health checks or known-safe network traffic. |
| Top DNS Discard | Delete/Route | Targeted removal of high-frequency, low-value DNS noise to save massive SIEM bandwidth. |
| Deduplication | Consolidate | Prevent the same event from being recorded hundreds of times (e.g., repeating system errors). |
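The ordering of the stages matters: exceptions are checked first, so an event that matches both an exception and a discard rule is still delivered. The sketch below shows that ordering only. The function name, predicates, and event fields are hypothetical, and deduplication is simplified to ignore the time window.

```python
def reduce_stream(events, is_exception, is_discard, dedup_key):
    """Run events through Exceptions -> Discard -> Deduplication, in order."""
    output, archive = [], []
    seen = set()
    suppressed = 0
    for event in events:
        if is_exception(event):
            output.append(event)   # bypasses every later stage
            continue
        if is_discard(event):
            archive.append(event)  # routed away from the main output
            continue
        key = dedup_key(event)
        if key in seen:
            suppressed += 1        # duplicate (time window omitted here)
            continue
        seen.add(key)
        output.append(event)
    return output, archive, suppressed

# Hypothetical sample: dc01 is a protected Domain Controller.
events = [
    {"host": "dc01",  "type": "health_check"},
    {"host": "web01", "type": "health_check"},
    {"host": "web01", "type": "disk_error"},
    {"host": "web01", "type": "disk_error"},
]

output, archive, suppressed = reduce_stream(
    events,
    is_exception=lambda e: e["host"] == "dc01",
    is_discard=lambda e: e["type"] == "health_check",
    dedup_key=lambda e: (e["host"], e["type"]),
)
```

The health check from `dc01` reaches the output because the exception wins, the one from `web01` is archived, and the repeated disk error is consolidated to a single event.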

Operational Impact

Operational Benefits

- Cost Optimization: Drastically lower licensing costs for ingestion-based SIEMs (often reducing volumes by 50%–80%).

- Improved Search Performance: Faster query times in your SIEM due to a cleaner, more relevant data set.

- Analyst Clarity: Reduced "alert fatigue" as analysts no longer have to sift through billions of lines of benign chatter.

Best Practices

We want you to feel confident and empowered as you optimize your data flow! Here are our favorite tips for a smooth and successful reduction journey:

- Prioritize Your Must-Haves: Start by deploying Exceptions for your "must-have" data sources to Production.

- Embrace Staging: Think of Staging as your risk-free sandbox. Letting a rule run for 24–48 hours is a great way to see how it would perform on your live data before anything is changed. It’s the best way to confirm your rules are tuned to your needs.

- Visualize The Reduction Impact: Use the reduction insights to show your team the tangible value you're bringing to the SOC across your log reduction rules.